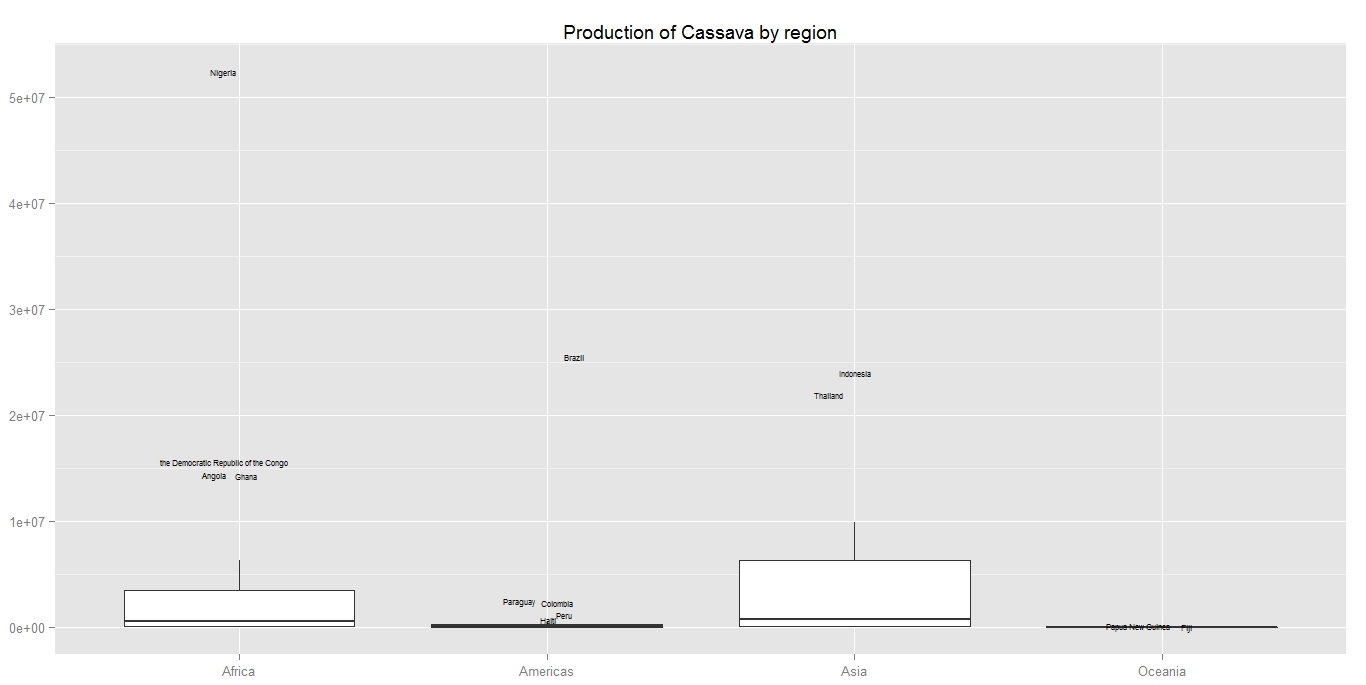

My last post on the maize network was interesting, but a single network on its own is not very informative. Today we are going to contrast the maize network with the wine trade network.

The reason for choosing wine will become clear after we look at the network and the analysis. Let's first have a look at the wine trade network; again, only the top 80% of the trade is shown.
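To make the "top 80%" cut concrete, here is a minimal sketch of the cumulative-share filter on a toy table. The data frame `flows` and its values are made up for illustration and are not the real wine data; the logic mirrors the cumulative-sum cutoff used in the code at the end of this post.

```r
# Hypothetical export flows (made-up values, not the real wine data)
flows <- data.frame(
  from  = c("A", "B", "A", "C", "D"),
  to    = c("B", "A", "C", "A", "A"),
  value = c(50, 25, 15, 7, 3),
  stringsAsFactors = FALSE
)

# Sort from the largest to the smallest flow
flows <- flows[order(flows$value, decreasing = TRUE), ]

# Cumulative share of total trade after each flow is added
flows$cum_share <- cumsum(flows$value) / sum(flows$value)

# Keep the flows whose cumulative share stays below 80%
top_flows <- flows[flows$cum_share < 0.8, ]
print(top_flows)
```

Because the comparison is a strict `<`, the flow that crosses the 80% line is dropped, so the retained share is at most 80% (75% in this toy table).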

First, we can see that the wine network is much more interconnected than the maize network. Second, the amount of reciprocal trading is much higher. Third, there does not appear to be any single trade flow of a magnitude comparable to the export of maize from the United States to Japan and Korea.
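These visual impressions can also be put into numbers. The sketch below is one possible way to do it, not the analysis behind the figure: it computes the share of reciprocated edges and the density of a directed edge list in base R. The edge list `edges` is hypothetical, and the calculation assumes no duplicate edges and no self-loops.

```r
# Hypothetical directed edge list (exporter -> importer), for illustration only
edges <- data.frame(
  from = c("France", "Italy", "France", "Germany", "Chile"),
  to   = c("Italy", "France", "Germany", "France", "Germany"),
  stringsAsFactors = FALSE
)

# An edge is reciprocated if the reverse edge also appears in the list
forward  <- paste(edges$from, edges$to, sep = "->")
backward <- paste(edges$to, edges$from, sep = "->")
reciprocated <- backward %in% forward

# Share of edges that belong to a two-way trading relationship
reciprocity <- mean(reciprocated)

# Density: observed edges over the n * (n - 1) possible directed edges
n <- length(unique(c(edges$from, edges$to)))
net_density <- nrow(edges) / (n * (n - 1))

print(c(reciprocity = reciprocity, density = net_density))
```

Running the same two measures on the maize and wine edge lists would give a simple numerical counterpart to the first two observations.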

The advantage of the network diagram is that it reveals not only the connections but also the structure of the network, and hence the nature of the market. It is clear that the markets for maize and wine are different; how and why they differ is what we are interested in.

Usage/consumption:

First of all, the uses of the two commodities are at opposite ends of the spectrum. Maize is a staple crop, essential to many regional cuisines and also used for livestock feed and biofuel. Wine, on the other hand, is consumed to a lesser extent and is considered a luxury or an enjoyment (although it is considered a necessity in Italy and several other countries).

Cost:

The usage of a commodity leads to differences in cost and elasticity. Staples and biofuel need to be cheap to be viable, so cost is an important consideration. In contrast, preference and quality play a much bigger role for wine than the actual price tag. This is why trade agreements play a significant role in promoting the maize trade between the United States, Japan and Korea, but matter less for the wine trade.

Value added/preferences:

Finally, the variety and the value added of the commodity characterize the trade. Japan can import maize from any country in the world for consumption, feed or biofuel production without any considerable change or impact, because the value added of maize is small; simply put, the product is easily substituted. Wine, on the other hand, is dominated by several producers such as France, Italy and a handful of New World producers, and their wines are not easily substituted given consumers' preferences. This contributes to the high number of trading partners, the interconnected clusters and the reciprocal trading: a single trading partner cannot satisfy the preferences of consumers, and even the French would like to have Italian wine occasionally.

This example illustrates how a simple network diagram can reveal a lot of interesting insights compared with analyzing just the quantity and value of trade. It also supports my view of how important exploratory analysis is.

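## Load the packages (arrange() and desc() are assumed to come from plyr; network() comes from network)

library(plyr)

library(network)

## Download the data

wine.df = read.csv("https://dl.dropbox.com/u/18161931/wine_trade.csv", header = TRUE,
                   stringsAsFactors = FALSE)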

## Take only the exports

wineEx.df = subset(wine.df, subset = type == "Export",
                   select = c("reporting_country", "partner_country", "Wine"))

## Sort the data and take only the top 80% of the trade

wineEx.df = arrange(wineEx.df, desc(Wine))

wineEx.df$sWine = scale(wineEx.df$Wine, center = FALSE)

wineEx.df$cs = cumsum(wineEx.df$Wine)

## Remove the trailing space from this reporting-country name

wineEx.df[wineEx.df[, 1] == "China, Hong Kong SAR ", 1] = "China, Hong Kong SAR"

wineFinal.df = subset(wineEx.df, cs < tail(wineEx.df$cs, 1) * 0.8)

## Set edge and arrow size

wineFinal.df$edgeSize = with(wineFinal.df, Wine/sum(Wine))

wineFinal.df$arrowSize = ifelse(wineFinal.df$edgeSize * 30 < 0.5, 0.5,
                                wineFinal.df$edgeSize * 15)

## Create the network and plot

wine.net = network(wineFinal.df[, 1:2])

plot(wine.net, displaylabels = TRUE, label.col = "steelblue",
     edge.lwd = c(wineFinal.df$edgeSize) * 100,
     arrowhead.cex = c(wineFinal.df$arrowSize),
     label.cex = 0.5, vertex.border = "white",
     vertex.col = "skyblue", edge.col = rgb(0, 0, 0, alpha = 0.5))